DevOps teams don’t set out to build debt-ridden systems. It’s just something that happens over time:

- You ship something fast under a deadline and promise to clean it up later

- You make changes in the cloud console because it’s faster than getting your OpenTofu/Terraform PR reviewed

- You inherit scripts from someone who left, and they mostly work, so you leave them alone

Each shortcut adds friction. Over time, testing becomes harder, rollbacks riskier, and no one knows which environment actually reflects reality. This is the path toward DevOps bankruptcy.

You know you’ve reached full-on bankruptcy when:

- Changes require tribal knowledge to implement

- Nobody can confidently inventory production resources

- There’s no reproducibility across environments

- Code is full of inconsistent naming conventions, outdated TODOs, and scripts from four years ago

- Even small tickets feel risky to touch

The longer it goes on, the more expensive it gets — both financially and operationally. Unplanned infrastructure work doesn’t show up on your roadmap, but it eats your budget. This is not uncommon. In fact, outages cost enterprises over $300,000 per hour, and for the Global 2000, annual downtime losses hover around $400 billion. Beyond the financial costs, 68% of tech workers cite burnout as the number one reason they leave. In DevOps, 41% of teams say burnout is a top challenge.

Thankfully, there’s a way out.

A 5-step program to escape DevOps bankruptcy

When the status quo becomes too painful to ignore, you can’t just add another patch or hire another engineer. What you need is a structured way to rebuild confidence, one step at a time.

1. Quantify the drag

You can’t fix what you can’t see. Track how much time your team spends on unplanned infrastructure work. Measure deployment frequency, lead time for changes, failure rates, and mean time to recover (MTTR). Use those metrics to establish a baseline of performance for your team.

Don’t have any expectations for these numbers at first. Focus on direction: Is your team getting more or less reactive? Are outages eating more of your time? Is progress moving in the right direction?

It’s only once you’ve established a baseline that you can see the ROI of your efforts and make the case to the team to keep investing in debt paydown.

2. Eliminate single points of knowledge

No system should depend on a single person to understand it. If the answer to “how does this work?” is someone’s name, you’re one resignation away from major risk. Infrastructure knowledge should live in code, documentation, and reproducible workflows—not in someone’s head.

Start by documenting common workflows, codifying naming conventions, and standardizing on shared tools and patterns. This gets a lot easier when infrastructure is defined in code. With IaC, you can bake these conventions directly into reusable modules and make peer review part of the normal workflow. That way, your system enforces consistency instead of tribal memory.

3. Budget for the cleanup

Debt doesn’t disappear on its own. If you’re not investing time in cleanup, your future will look just like your past.

Set aside a fixed percentage of engineering time in every sprint for refactoring, replacing brittle components, and building toward long-term stability. Paying off infrastructure debt is the same as any other. It requires consistent, regular payments to get back in the black.

Budgeting for debt paydown also reduces burnout: it makes explicit space for tasks that otherwise would only ever be done in overtime by your best people.

4. Automate the routine

Manual work is expensive, error-prone, and hard to scale. Every time a developer has to click through the console, you’re introducing risk.

Left unchecked, it becomes the source of repeated errors, delayed releases, and unpredictable recoveries. Replacing manual steps with infrastructure as code (IaC), reusable modules, CI/CD pipelines, and policy-as-code is the fastest way to reduce deployment friction and improve system reliability.

If that feels like a lot of unfamiliar territory, don’t worry. Gruntwork’s docs walk through how to approach automation safely and incrementally, starting with the highest-impact areas.

You don’t need to automate everything at once. Start by documenting what’s manual today. Then sort by frequency, time cost, and risk. Look for high ROI work that gets repeated often or blocks other teams. Schedule those efforts alongside new development, and treat automation as part of your infrastructure hygiene.

The goal is steady reduction in toil, not overnight transformation.

5. Focus on outcomes, not motion

"Being busy" is the hallmark of a failing system. Focus on actions with meaningful outcomes:

- Implement a Toil Budget: Cap the amount of time your team is allowed to spend on manual, repetitive operational tasks. If you exceed the budget (e.g., 25% of a sprint), all new feature work stops until you automate enough toil away to get back under the limit.

- Enforce the "Two-Strikes" Rule: If the same manual intervention or production fire drill happens twice, it is immediately converted into a P1 engineering ticket for a permanent, automated solution. No exceptions.

Proof: what this looks like in the real world

A leading healthcare organization came to Gruntwork with a familiar problem: infrastructure was provisioned manually, environments were inconsistent, and only a handful of engineers understood how things worked. They had adopted OpenTofu/Terraform but without standardization or automation, deployments still required tribal knowledge and hours of manual validation.

We helped them:

- Adopt reusable IaC modules for core infrastructure

- Standardize CI/CD pipelines with built-in drift detection

- Define rollback strategies and eliminate one-off scripts

Within weeks, their platform team could spin up reproducible environments confidently. Deployments no longer relied on institutional memory. Knowledge became distributed, not hoarded. Team velocity increased (and so did morale).

This isn’t an isolated case. Whether you’re in healthcare, media, finance, or SaaS, high-friction infrastructure follows the same patterns and the solutions follow the same principles.

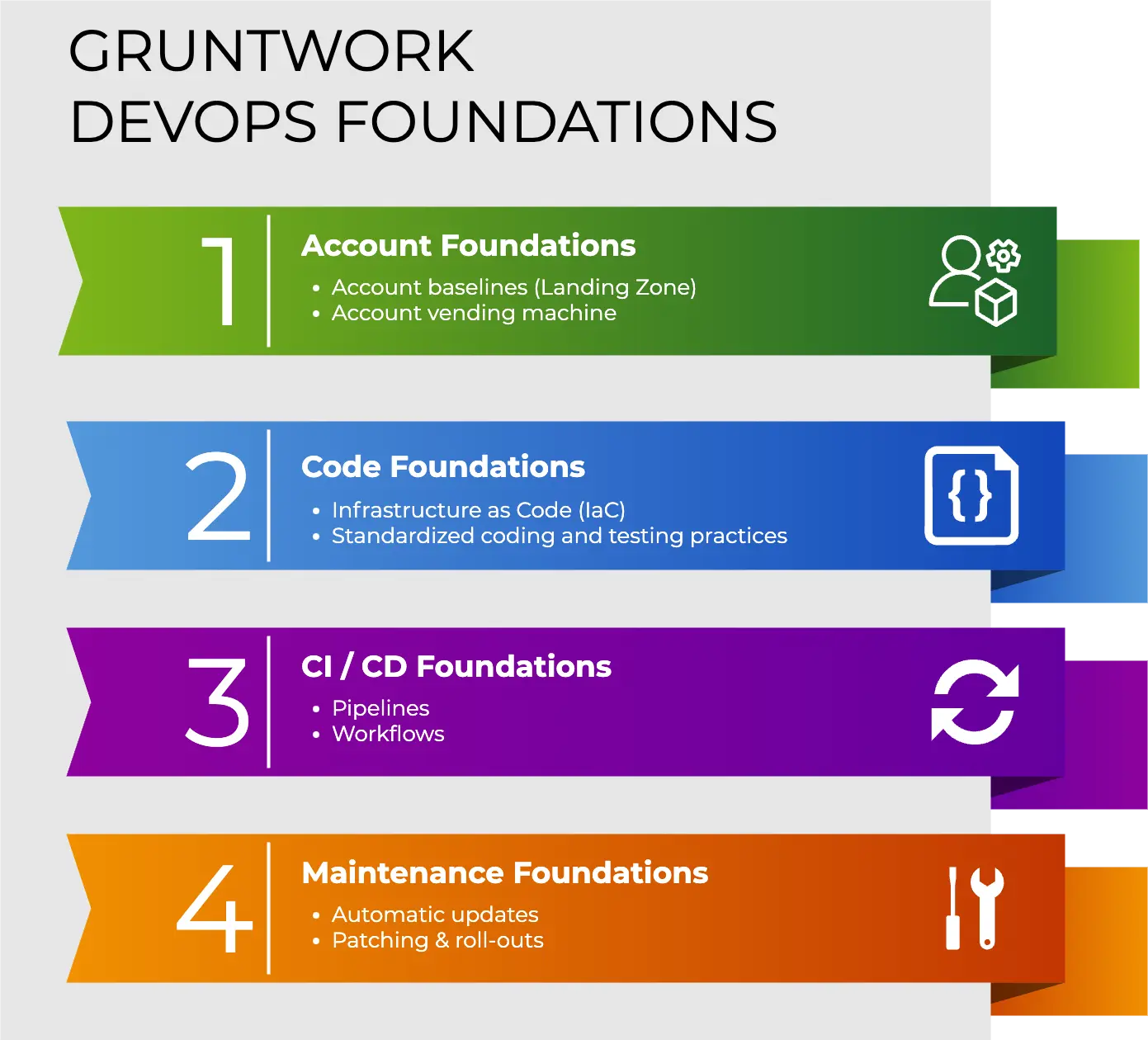

Versioned, repeatable, peer-reviewed infrastructure

Infrastructure as Code (IaC) addresses the core problem behind DevOps bankruptcy: the high cost of making changes. It reduces friction, improves reliability, and makes environments predictable.

When IaC is implemented well:

- Environments are created and destroyed consistently

- Rollbacks are rare and become painless code reverts, not all-hands incidents

- Changes go through peer review and policy enforcement

- On-call engineers don’t rely on shell history and tribal memory

To scale IaC across teams and environments, you need structure. Terragrunt gives you that structure: reusable, DRY patterns for organizing your OpenTofu/Terraform codebase, managing dependencies, and sharing modules across accounts. It supports everything from small teams to full-scale enterprise orgs. Terragrunt Scale goes a step further, giving you automation and collaboration tools to ensure your IaC is set up for enterprise-scale success.

Build once, scale calmly

DevOps bankruptcy tends to emerge from a series of high-friction choices: inconsistent infrastructure, unclear ownership, manual provisioning, and pipelines that are difficult to manage or debug. These issues reduce confidence in deployments and increase the risk of incidents. Over time, you end up taking on more and more technical debt until you’re approaching the point of bankruptcy: spending more time patching than building the future.

Infrastructure as Code (IaC), and tools like Terragrunt Scale, offer a more reliable way to manage infrastructure. With versioned, automated, and testable configurations, teams can create predictable environments and improve both delivery speed and operational stability.

Recovering from infrastructure debt takes ongoing effort. Teams that prioritize maintainability see fewer errors, shorter recovery times, and better alignment between engineering and business priorities.

Ready to get out of DevOps debt? If you're nodding along to this article, you're not alone. DevOps bankruptcy is a tough place to be, but it doesn’t have to be a permanent state. Let us help assess your current state and map out your path back to productivity.

- No-nonsense DevOps insights

- Expert guidance

- Latest trends on IaC, automation, and DevOps

- Real-world best practices