Once a month, we send out a newsletter to all Gruntwork customers that describes all the updates we’ve made in the last month, news in the DevOps industry, and important security updates. Note that many of the links below go to private repos in the Gruntwork Infrastructure as Code Library and Reference Architecture that are only accessible to customers.

Hello Grunts,

We’ve got three major new releases to share with you in this newsletter! First, Gruntwork Pipelines, which you can use to create a secure, automated CI / CD pipeline for Terraform/Terragrunt code, with approval workflows and Slack notifications, using your CI server of choice. Second, Gruntwork AWS Landing Zone, which you can use to set up new AWS accounts (via AWS Organizations) and configure them with users, permissions, and guard rails (GuardDuty, CloudTrail, AWS Config, etc.) in minutes. Third, the Gruntwork Store, where you can buy all sorts of Gruntwork and DevOps swag, such as t-shirts, hoodies, and coffee mugs. We’ve also made major updates to Terragrunt, lots of progress on supporting Helm 3, and a ton of other bug fixes and improvements.

On a more personal note, we are all doing our best to cope as COVID-19 (coronavirus) sweeps the world. Fortunately, Gruntwork has been a 100% distributed company since day 1, so we were already set up for working from home, and while we’re not exactly at 100% (who could be in times like these?), we are committed to continuing to work on our mission of making it 10x easier to understand, build, and deploy software. In fact, with everyone stuck at home and online all day, software is becoming more important than ever in keeping us all connected, informed, productive, and entertained. We will continue chugging along as always, and we sincerely hope you’re all able to stay safe and get through this with us.

As always, if you have any questions or need help, email us at support@gruntwork.io!

Gruntwork Updates

[NEW RELEASE] Gruntwork Pipelines: CI / CD for Terraform and Terragrunt

Motivation: Many customers have been asking us what sort of workflow they should use with Terraform and Terragrunt. They wanted to know how to work together as a team, when to use terraform plan or Terratest, how to review Terraform code, and how to do Continuous Integration and Continuous Delivery (CI/CD) with infrastructure code. There are many solutions in this space, but most of them left a lot to be desired in terms of security, the ability to set up custom workflows, and support for tooling.

Solution: We’ve created a new solution called Gruntwork Pipelines! It allows you to set up a secure, automated CI / CD pipeline for Terraform and Terragrunt that works with any CI server (e.g., Jenkins, GitLab, CircleCI) and supports approval workflows. Here’s a brief preview of the pipeline in action:

Check out the How to configure a production-grade CI/CD workflow for infrastructure code deployment guide for a longer video with sound, instructions on how to set up Gruntwork Pipelines, and detailed discussions of why you should use CI/CD, a typical CI/CD workflow, how to structure your infrastructure code, threat models around infrastructure CI/CD, what platforms to use to mitigate threats and more.

Under the hood, Gruntwork Pipelines consists of a set of modules and tools that help with implementing a secure, production-grade CI/CD pipeline for infrastructure code, based on the design covered in the deployment guide. All the modules are available in the module-ci repository, and include the following:

- ecs-deploy-runner module: A module to create a Fargate enabled ECS task with a Lambda function frontend to trigger and run infrastructure code in an isolated environment.

- ecs-deploy-runner-invoke-iam-policy module: A module to create an IAM policy that provides the minimal permissions necessary to invoke the Lambda function to trigger deployments with the ECS Deploy Runner.

- infrastructure-deploy-script module: A Python script that provides a minimal interface for running Terraform or Terragrunt against infrastructure code stored in a remote repository.

- infrastructure-deployer module: A CLI utility for invoking the Lambda function to trigger deployments with the ECS Deploy Runner and stream the logs.

What to do about it: Check out our Production Deployment Guide, take it for a spin on your infrastructure code, and let us know what you think!

[NEW RELEASE] Gruntwork AWS Landing Zone

Motivation: Setting up AWS accounts for production is hard. You need to create multiple accounts and configure each one with a variety of authentication, access controls, and security features by using AWS Organizations, IAM Roles, IAM Users, IAM Groups, IAM Password Policies, Amazon GuardDuty, AWS CloudTrail, AWS Config, and a whole lot more. There are a number of existing solutions on the market, but all have a number of limitations, and we’ve gotten lots of customer requests to offer something better.

Solution: We’re happy to announce Gruntwork’s AWS Landing Zone solution, which allows you to set up production-grade AWS accounts using AWS Organizations, and configure those accounts with a security baseline that includes IAM roles, IAM users, IAM groups, GuardDuty, CloudTrail, AWS Config, and more—all in a matter of minutes. Moreover, the entire solution is defined as code, so you can fully customize it to your needs.

The new code lives in the module-security repo of the Infrastructure as Code Library and includes:

- account-baseline-root: A security baseline for configuring the root account (also known as the master account) of an AWS Organization, including setting up child accounts.

- account-baseline-security: A security baseline for configuring the security account where all of your IAM users and IAM groups are defined.

- account-baseline-app: A security baseline for configuring accounts designed to run your apps.

What to do about it: Check out our Gruntwork AWS Landing Zone announcement blog post for a quick walkthrough of how to use the account-baseline modules to set up your entire AWS account structure in minutes, and our updated Production Deployment Guide for the full details.

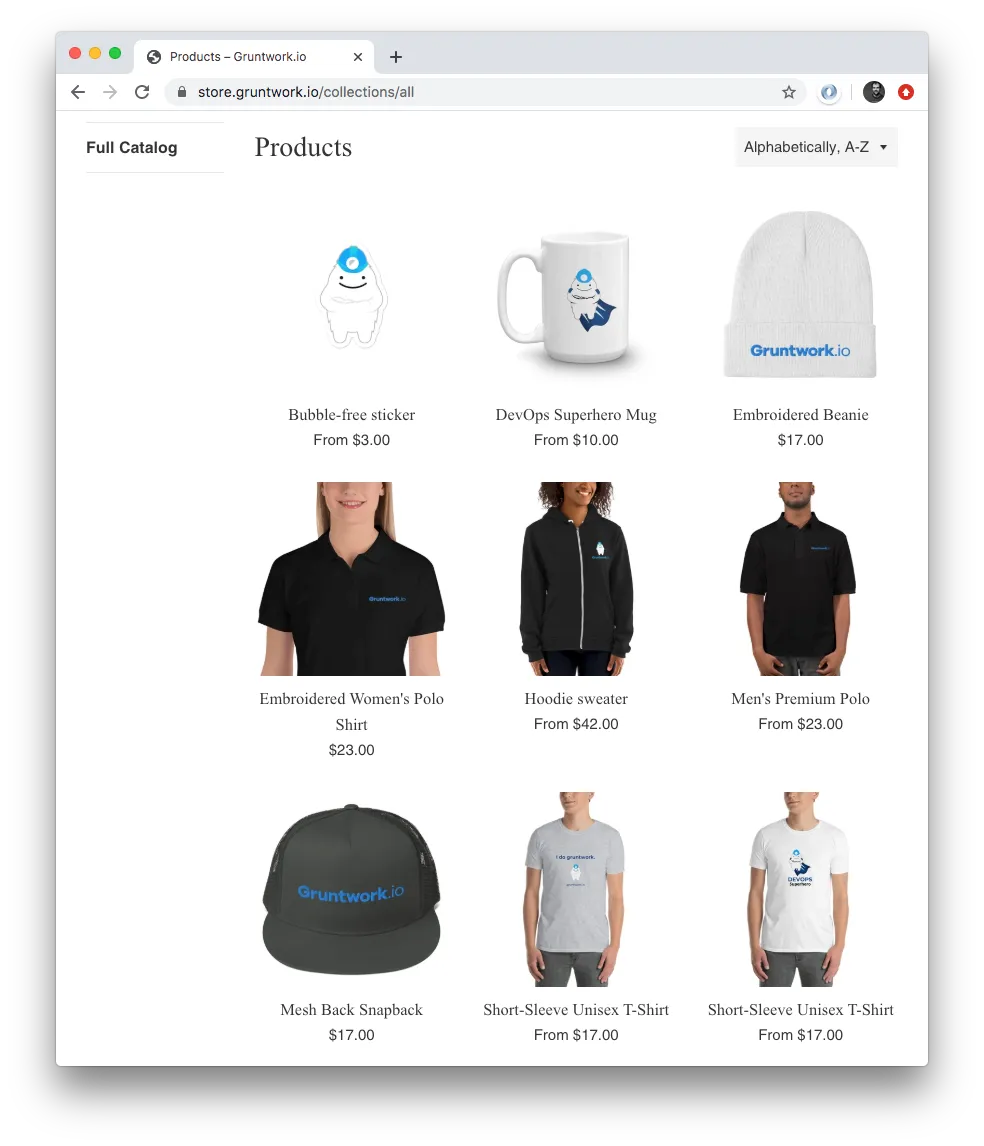

[NEW RELEASE] The Gruntwork Store

Motivation: We want to sport the finest apparel while looking incredible, and why would we keep that to ourselves?

Solution: We created a new Gruntwork Store with many new designs to choose from: t-shirts, hoodies, coffee mugs, stickers, and more!

What to do about it: Check out the new Gruntwork Store to find your newest addition (to your closet!)

Terragrunt improvements: Code generation and read_terragrunt_config

Motivation: Many users of Terragrunt wanted to be able to use off-the-shelf modules, either from the Gruntwork Infrastructure as Code Library, or other repositories, without having to “wrap” those modules with their own code to add boilerplate code, such as provider or backend configurations. Users also wanted to know how to make their Terragrunt code more DRY by reusing parts of existing configurations, such as common variables.

Solution: This month we introduced two new features to Terragrunt that directly address the pain points of third party modules and config reusability:

- Code generation with generate blocks: generate blocks allow you to generate arbitrary files in the Terragrunt working directory (where terragrunt calls terraform). This allows you to dynamically generate a .tf file that includes the necessary provider and backend code to use off-the-shelf modules. See the updated documentation for more details.

- read_terragrunt_config helper function: The read_terragrunt_config helper function allows you to parse and read in another terragrunt configuration file to reuse pieces of that config. For example, you can use read_terragrunt_config to load a common variable and use that to name your resources: name = read_terragrunt_config("prod.hcl").locals.foo. This allows for better code reuse across your terragrunt project. Check out the function documentation for more details.

These two functionalities in combination can lead to more DRY Terragrunt projects. To highlight this, we have updated our example code to take advantage of these features.
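As a minimal sketch of how the two features can fit together in a single terragrunt.hcl: the module source URL, the AWS region, and the contents of prod.hcl below are assumptions made for illustration, not taken from the Gruntwork example code.

```hcl
# terragrunt.hcl (illustrative sketch; requires Terragrunt v0.22.3 or newer)

locals {
  # Parse a sibling Terragrunt config and expose its contents.
  # Assumes prod.hcl sits next to this file and contains:
  #   locals { foo = "prod-app" }
  prod = read_terragrunt_config("prod.hcl")
}

terraform {
  # Hypothetical off-the-shelf module that ships without provider or backend code.
  source = "git::git@github.com:example-org/example-modules.git//modules/app?ref=v0.0.1"
}

# Write a provider.tf into the Terragrunt working directory before terraform runs,
# so the module does not need to be wrapped just to add provider boilerplate.
generate "provider" {
  path      = "provider.tf"
  if_exists = "overwrite"
  contents  = <<EOF
provider "aws" {
  region = "us-east-1"
}
EOF
}

inputs = {
  # Reuse a value defined in the other config to name resources.
  name = local.prod.locals.foo
}
```

Loading the parsed config into a local once, rather than calling read_terragrunt_config inline for every input, keeps the file readable when several values are reused.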

What to do about it: Upgrade to the latest terragrunt version, check out our example code, try out the new features, and let us know what you think!

Update on Helm 3 Support

Motivation: Helm 3.0.0 was released and became generally available in November of last year. This was a big release, addressing one of the biggest pain points of Helm by removing Tiller, the server side component. Since then, many tools have upgraded and adapted to the changes introduced, including the terraform provider which was updated last month. Now that all the tools have caught up, we are ready to start updating our library for compatibility.

Solution: We have begun to update many of our components to be compatible with Helm v3! While this is still a work in progress, many components have been adapted in the last month. Here is a list of tools and components in our library that are now compatible with Helm v3:

- Terratest: Terratest now works with Helm v3 starting as of v0.25.0. We also updated our blog post on using Terratest to test Helm Charts to use Helm v3 instead of v2.

- helm-kubernetes-services: The k8s-service chart in helm-kubernetes-services is now tested with Helm v3, and known to work without any changes.

- terraform-aws-eks: Starting with v0.16.0, the EKS administrative application modules (eks-k8s-external-dns, eks-k8s-cluster-autoscaler, eks-cloudwatch-container-logs, and eks-alb-ingress-controller) have been updated to follow best practices with Helm v3. We have also updated our supporting services example to use the latest helm provider with Helm v3 as a reference on how to update your code.

Note that although many of our components now support Helm v3, we recommend holding off on updating Reference Architecture deployments until it has been officially updated with the new modules.

What to do about it: Try out the new modules to get a feel for the differences with Helm v3 and let us know what you think!

Open Source Updates

Terragrunt

- terragrunt, v0.21.12: There is now a special after hook, terragrunt-read-config, which is triggered immediately after terragrunt loads the config (terragrunt.hcl). You can use this to hook important processes that should be run as the first thing in your terragrunt pipeline.

- terragrunt, v0.22.0: This release introduces the generate block and the generate attribute to the remote_state block. These features can be used to generate code/files in the Terragrunt working directory (where Terragrunt calls out to Terraform) prior to calling terraform. See the updated docs for more info. Also, see here for documentation on generate blocks and here for documentation on the generate attribute of the remote_state block.

- terragrunt, v0.22.1: This release allows creating GCS buckets for state storage with the Bucket Policy Only attribute.

- terragrunt, v0.22.2: This release exposes a configuration to disable checksum computations for validating requests and responses. This can be used to work around #1059.

- terragrunt, v0.22.3: This release introduces the read_terragrunt_config function, which can be used to load and reference another terragrunt config. See the function documentation for more details. This release also fixes a bug with the generate block where comment_prefix was ignored.

- terragrunt, v0.22.4: This fixes a bug in the behavior of --terragrunt-include-dir where there was an inconsistency in when dependencies that were not directly in the included dirs were included. Now all dependencies of included dirs will consistently be included as stated in the docs. This release also introduces a new flag, --terragrunt-strict-include, which will ignore dependencies when processing included directories.

- terragrunt, v0.22.5: Terragrunt will now respect the external_id and session_name parameters in your S3 backend config.

- terragrunt, v0.23.0: The find_in_parent_folders function will now return an absolute path rather than a relative path. This should make the function less error-prone to use, especially in situations where the working directory may change (e.g., due to .terragrunt-cache).

- terragrunt, v0.23.2: Terragrunt will now always look for its config in terragrunt.hcl and terragrunt.hcl.json files.
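To make a few of these releases concrete, here is a rough sketch of a root terragrunt.hcl and a child terragrunt.hcl that use the terragrunt-read-config after hook, the generate attribute of remote_state, and find_in_parent_folders. The bucket name, lock table name, region, and the echo command are placeholders chosen for illustration.

```hcl
# Root terragrunt.hcl (illustrative sketch; assumes Terragrunt v0.22.0 or newer)

terraform {
  # Runs right after Terragrunt has loaded the config (v0.21.12+).
  after_hook "after_read_config" {
    commands = ["terragrunt-read-config"]
    execute  = ["echo", "Terragrunt config loaded"]
  }
}

remote_state {
  backend = "s3"

  # Generate a backend.tf in the working directory instead of requiring each
  # module to declare an empty backend block itself.
  generate = {
    path      = "backend.tf"
    if_exists = "overwrite"
  }

  config = {
    bucket         = "example-terraform-state" # placeholder
    key            = "${path_relative_to_include()}/terraform.tfstate"
    region         = "us-east-1"               # placeholder
    encrypt        = true
    dynamodb_table = "example-terraform-locks" # placeholder
  }
}

# Child terragrunt.hcl, in a subfolder of the root:
#
# include {
#   # As of v0.23.0 this resolves to an absolute path to the root terragrunt.hcl.
#   path = find_in_parent_folders()
# }
```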

Terratest

- terratest, v0.23.5: Add better support for tests against minikube. Specifically, k8s.WaitUntilServiceAvailableE and k8s.GetServiceEndpointE now properly handle LoadBalancer service types on minikube.

- terratest, v0.24.0: Starting this release, all the examples and automated testing in terratest have switched to using the Terraform 0.12 series. As a result, we have dropped support for the Terraform 0.11 series. If you are using Terraform 0.11, please use an older terratest version.

- terratest, v0.24.1: This release introduces new helper functions for invoking AWS Lambda Functions: aws.InvokeFunctionE and aws.InvokeFunction.

- terratest, v0.25.0: Starting this release, the functions in the helm module have been updated for Helm v3 compatibility. As a part of this, support for Helm v2 has been dropped. To upgrade to this release, you must update your CI pipelines to use Helm v3 instead of Helm v2 with Tiller.

- terratest, v0.25.2: You can now configure the WorkingDir and OutputMaxLineSize parameters in packer.Options.

- terratest, v0.26.0: All Terratest methods now take in a t testing.TestingT parameter instead of the Go native t *testing.T. testing.TestingT is an interface that is identical to *testing.T, but with only the methods used by Terratest. That means you can continue passing in the native *testing.T, but now you can also use Terratest in a wider variety of contexts (e.g., with GinkgoT).

Other open-source updates

- terraform-aws-couchbase, v0.2.4: Updated the example Packer template to replace the deprecated clean_ami_name function with clean_resource_name.

- terraform-aws-vault, v0.13.5: Updated the example Packer template to use the clean_resource_name function instead of the deprecated clean_ami_name function.

- terraform-aws-vault, v0.13.6: You can now use Vault with DynamoDB as a backend by specifying the --enable-dynamo-backend, --dynamo-region, and --dynamo-table parameters in run-vault and the enable_dynamo_backend, dynamo_table_name, and dynamo_table_region variables in vault-cluster.

- cloud-nuke, v0.1.14: Add support for --resource-type rds to nuke RDS Databases. They’ll also be nuked if you run cloud-nuke without any filter.

- terraform-aws-nomad, v0.5.2: The nomad-security-group-rules module now correctly handles the case where allowed_inbound_cidr_blocks is an empty list.

- kubergrunt, v0.5.10: This fixes a nondeterministic failure in retrieving the OIDC thumbprint: depending on timing, retrieval was likely to fail while the EKS cluster was first launching.

- kubergrunt, v0.5.11: This fixes a bug in the eks deploy command where it did not handle LoadBalancer Services that are internal.

Other updates

module-ci

- module-ci, v0.16.5: The modules under iam-policies now allow you to set the create_resources parameter to false to have the module not create any resources. This is a workaround for Terraform not supporting the count parameter on module { ... } blocks.

- module-ci, v0.17.0: Support for the ECS Deploy Runner stack.

- module-ci, v0.18.0: Made several updates to the jenkins-server module: expose a new user_data_base64 input variable that allows you to pass in Base64-encoded User Data (e.g., a gzipped cloud-init script); fixed deprecation warnings with the ALB listener rules; updated the version of the alb module used under the hood, which no longer sets the Environment tag on the load balancer.

- module-ci, v0.18.1: You can now configure the health check max retries and time between retries for Jenkins using the new input variables deployment_health_check_max_retries and deployment_health_check_retry_interval_in_seconds, respectively. Changed the default settings to be ten minutes' worth of retries instead of one hour.

- module-ci, v0.18.2: Add support for Mac OSX to the git-updated-folders script.

- module-ci, v0.18.3: This release fixes a bug in the infrastructure-deployer CLI where it did not handle task start failures correctly.

- module-ci, v0.18.4: This release updates the terraform-update-variables script to run Terraform in the same folder as the updated vars file when formatting the code.
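The create_resources workaround mentioned in v0.16.5 (and in several other modules in this newsletter) generally follows the pattern sketched below. This is a generic illustration using a hypothetical module named example, not the actual source of the iam-policies modules: the flag is threaded through to a count on each resource inside the module, since count cannot be set on the module block itself.

```hcl
# modules/example/main.tf (hypothetical module, for illustration only)

variable "create_resources" {
  description = "Set to false to have this module create no resources."
  type        = bool
  default     = true
}

data "aws_iam_policy_document" "example" {
  statement {
    actions   = ["s3:ListAllMyBuckets"]
    resources = ["*"]
  }
}

resource "aws_iam_policy" "example" {
  # Every resource in the module is gated on the flag: one copy when true,
  # zero copies when false.
  count  = var.create_resources ? 1 : 0
  name   = "example-policy"
  policy = data.aws_iam_policy_document.example.json
}

# Calling code, in a separate root configuration:
#
# module "example" {
#   source           = "./modules/example"
#   create_resources = false # the module is included but creates nothing
# }
```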

terraform-aws-eks

- terraform-aws-eks, v0.14.0: The eks-cluster-control-plane now supports specifying a CIDR block to restrict access to the public Kubernetes API endpoint. Note that this is only used for the public endpoint: you cannot restrict access by CIDR for the private endpoint yet.

- terraform-aws-eks, v0.15.0: The IAM Role for Service Accounts (IRSA) input variables for the application modules (eks-k8s-external-dns, eks-k8s-cluster-autoscaler, eks-cloudwatch-container-logs, and eks-alb-ingress-controller) are now required. Previously, we defaulted use_iam_role_for_service_accounts to true, but this meant that you needed to provide two required variables, eks_openid_connect_provider_arn and eks_openid_connect_provider_url. However, these had defaults of empty string and do not cause an error in the terraform config, which means that you would have a successful deployment even if they weren't set. Starting this release, the IRSA input variables have been consolidated to a single required variable, iam_role_for_service_accounts_config.

- terraform-aws-eks, v0.15.1: The clean_up_cluster_resources script now cleans up residual security groups from the ALB ingress controller.

- terraform-aws-eks, v0.15.2: The eks-cloudwatch-container-logs module now deploys a newer version of the fluentd container that supports IRSA.

- terraform-aws-eks, v0.15.3: This release introduces support for setting encryption configurations on your EKS cluster to implement envelope encryption of Secrets. Refer to the official AWS technical blog post for more information.

- terraform-aws-eks, v0.15.4: eks-cluster-workers now supports attaching secondary security groups in addition to the one created internally. This is useful to break cyclic dependencies between modules when setting up ELBs.

- terraform-aws-eks, v0.15.5: You can now use cloud-init for boot scripts for self-managed workers by providing it as user_data_base64.

- terraform-aws-eks, v0.16.0: This release introduces Helm v3 compatibility for the EKS administrative application modules, eks-k8s-external-dns, eks-k8s-cluster-autoscaler, eks-cloudwatch-container-logs, and eks-alb-ingress-controller. The major difference between this release and previous releases is that we no longer are creating the ServiceAccounts in terraform and instead rely on the Helm charts to create the ServiceAccounts. Refer to the Migration Guide in the release notes for information on how to migrate to this version.

- terraform-aws-eks, v0.16.1: Fix an issue with the helm provider where the stable helm repository does not refresh correctly in certain circumstances.

- terraform-aws-eks, v0.17.0: This release adds support for Kubernetes 1.15 and drops support for 1.12.
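For readers curious what the public endpoint CIDR restriction (v0.14.0) and Secrets envelope encryption (v0.15.3) look like at the AWS level, the sketch below uses the raw aws_eks_cluster resource from the Terraform AWS provider. It is not the interface of the eks-cluster-control-plane module, whose input variable names are not listed in this newsletter; all variable names, the cluster name, and the CIDR block are placeholders.

```hcl
# Illustrative sketch using the AWS provider directly, not the Gruntwork module.

variable "cluster_role_arn" {
  description = "ARN of the IAM role the EKS control plane assumes (placeholder)."
  type        = string
}

variable "subnet_ids" {
  description = "Subnets for the EKS control plane (placeholder)."
  type        = list(string)
}

variable "secrets_kms_key_arn" {
  description = "ARN of the KMS key used to envelope-encrypt Kubernetes Secrets (placeholder)."
  type        = string
}

resource "aws_eks_cluster" "example" {
  name     = "example"
  role_arn = var.cluster_role_arn
  version  = "1.15"

  vpc_config {
    subnet_ids              = var.subnet_ids
    endpoint_private_access = true
    endpoint_public_access  = true

    # Only these CIDR blocks may reach the public Kubernetes API endpoint.
    # This setting does not apply to the private endpoint.
    public_access_cidrs = ["203.0.113.0/24"]
  }

  # Envelope encryption of Kubernetes Secrets with a customer-managed KMS key.
  encryption_config {
    resources = ["secrets"]

    provider {
      key_arn = var.secrets_kms_key_arn
    }
  }
}
```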

module-data-storage

- module-data-storage, v0.11.4: You can now limit the Availability Zones the rds module uses for replicas via the allowed_replica_zones parameter.

- module-data-storage, v0.11.5: You can now configure backtracking (in-place, destructive rollback to a previous point-in-time) on Aurora clusters using the backtrack_window variable.

- module-data-storage, v0.12.0: Allow specifying the Certificate Authority (CA) bundle to use in the aurora module via the ca_cert_identifier input variable. Update the ca_cert_identifier input variable in the rds module to set the default to null instead of hard-coding it to rds-ca-2019.

- module-data-storage, v0.12.1: Add the ability to enable deletion_protection in the rds module.

- module-data-storage, v0.12.2: Add the ability to enable Performance Insights in the rds module. Also added copy_tags_to_snapshot support to the rds and aurora modules.

- module-data-storage, v0.12.3: Make var.allow_connections_from_cidr_blocks optional.

- module-data-storage, v0.12.4: This release fixes a bug where the lambda function for creating a snapshot needed the ability to invoke itself for retry logic.

- module-data-storage, v0.12.5: The lambda functions for snapshot management have been upgraded to the python3.7 runtime.

- module-data-storage, v0.12.6: lambda-create-snapshot and lambda-cleanup-snapshots now support namespacing snapshots so that you can differentiate between snapshots created with different schedules. Take a look at the lambda-rds-snapshot-multiple-schedules example to see how to use this feature to manage daily and weekly snapshots.

- module-data-storage, v0.12.7: Fix log message for lambda function in lambda-create-snapshot to show what cloudwatch metric was updated.

- module-data-storage, v0.12.8: Add support for choosing the time window for Aurora Cluster Instances.

- module-data-storage, v0.12.9: Each of the manual scheduled snapshot Lambda function modules now exposes an input variable create_resources to allow conditionally turning them off.

- module-data-storage, v0.12.10: The rds module now allows you to enable IAM authentication for your database.

module-ecs

- module-ecs, v0.17.0: This release introduces support for ECS capacity providers in the ecs-service module. This allows you to provide a strategy for how to run the ECS tasks of the service, such as distributing the load between Fargate and Fargate Spot.

- module-ecs, v0.17.1: This update adds tags for ECS services and task definitions. To add a tag to a service, provide a map with the service_tags variable. Refer to the release notes for more details.

- module-ecs, v0.17.2: The ecs-service module now exposes task_role_permissions_boundary_arn and task_execution_role_permissions_boundary_arn input parameters that can be used to set permission boundaries on the IAM roles created by this module.

- module-ecs, v0.17.3: Add logs:CreateLogGroup to the IAM permissions for the ECS task execution role. This is necessary for ECS to create a new log group if the configured log group does not already exist.

- module-ecs, v0.18.0: Introduces two new list variables: allow_ssh_from_cidr_blocks and allow_ssh_from_security_group_ids. Use these lists to configure more flexible SSH access.

module-security

- module-security, v0.23.0: This release introduces a new module, aws-config-multi-region, which can be used to configure AWS Config in multiple regions of an account. There are also major changes to the GuardDuty modules. Refer to the release notes for more details.

- module-security, v0.23.1: Address the issue of perpetual diff with AWS Organization child account property iam_user_access_to_billing.

- module-security, v0.23.2: This release enhanced a few internal tools that are used for maintaining the multi-region modules. No changes were made to the terraform modules in this release.

- module-security, v0.23.3: This release fixes a bug where the cloudtrail module sometimes fails due to not being able to see the IAM role that grants access to CloudWatch Logs.

- module-security, v0.24.0: The kms-master-key module now exposes a customer_master_key_spec variable that allows you to specify whether the key contains a symmetric key or an asymmetric key pair and the encryption algorithms or signing algorithms that the key supports. The module now also grants kms:GetPublicKey permissions, which is why this release was marked as "backward incompatible."

- module-security, v0.25.1: This release fixes a regression in the fail2ban module that prevented it from starting up on Amazon Linux 2.

- module-security, v0.26.0: The iam_groups module no longer accepts the aws_account_id and aws_region input variables.

- module-security, v0.26.1: Previously, users with read-only permissions could not filter by resource group names in the CloudWatch console. This release grants additional IAM permissions to allow filtering.

- module-security, v0.27.0: This release introduces support for managing more than one KMS Customer Master Key (CMK) using the kms-master-key module.

- module-security, v0.27.1: Fix a bug where some of our install scripts were missing DEBIAN_FRONTEND=noninteractive on the apt-get update calls. As a result, certain updates (such as tzdata) would occasionally try to request an interactive prompt, which would freeze or break Packer or Docker builds.
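The customer_master_key_spec variable added in v0.24.0 shares its name with an argument on the aws_kms_key resource in the Terraform AWS provider. As a rough sketch of what an asymmetric key looks like at that level (using the raw resource rather than the kms-master-key module, whose full interface is not shown in this newsletter), with the description and key spec chosen purely for illustration:

```hcl
# Illustrative sketch using the AWS provider directly, not the Gruntwork module.

resource "aws_kms_key" "signing" {
  description = "Example asymmetric key pair for signing" # placeholder

  # RSA_2048 selects an asymmetric RSA key pair. The provider default,
  # SYMMETRIC_DEFAULT, creates a symmetric key instead.
  customer_master_key_spec = "RSA_2048"

  # Asymmetric keys must declare whether they are used for
  # encryption/decryption or for signing/verification.
  key_usage = "SIGN_VERIFY"
}

output "signing_key_arn" {
  value = aws_kms_key.signing.arn
}
```

Because the public half of an asymmetric key is meant to be distributed, callers need the kms:GetPublicKey permission to download it, which is consistent with the module now granting that permission.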

module-aws-monitoring

- module-aws-monitoring, v0.16.0: The run-cloudwatch-logs-agent.sh script no longer takes in a --vpc-name parameter, which was only used to set a log group name if --log-group-name was not passed in. The --log-group-name parameter is now required, which is simpler and makes the intent clearer.

- module-aws-monitoring, v0.17.0: All the modules under alarms now expose a create_resources parameter that you can set to false to disable the module so it creates no resources. This is a workaround for Terraform not supporting count or for_each on module.

- module-aws-monitoring, v0.18.0: The cloudwatch-memory-disk-metrics module now creates and sets up a new OS user, cwmonitoring, to run the monitoring scripts as. Previously this was using the user who was calling gruntwork-install, which is typically the default user for the cloud (e.g., ubuntu for Ubuntu and ec2-user for Amazon Linux). You can control which user to use by setting the module parameter cron-user.

- module-aws-monitoring, v0.18.1: Correct the docs and usage instructions for the cloudwatch-log-aggregation-scripts module to correctly indicate that --log-group-name is required.

- module-aws-monitoring, v0.18.2: Fix bug in run-cloudwatch-logs-agent.sh where the first argument passed to --extra-log-files was being skipped.

- module-aws-monitoring, v0.18.3: Added a create_resources input variable to cloudwatch-custom-metrics-iam-policy so you can turn the module on and off (this is a workaround for Terraform not supporting count in module).

- module-aws-monitoring, v0.19.0: Remove unused variables aws_account_id and aws_region.

CIS Compliance

- cis-compliance-aws, v0.4.0: aws-config is now a general subscription module and has been migrated to module-security under the name aws-config-multi-region starting with version v0.23.0.

- CIS Reference Architecture IAM Fix: Previously our CIS Reference Architecture was missing the cross-account IAM role configuration needed to assume the allow-ops-admin-access-from-external-accounts IAM role in the child AWS accounts. This meant that you could not run any of the terragrunt and terraform modules in those accounts since there was no IAM role with enough permissions to run a deployment. The change set in this release can be applied to your version of the reference architecture to create the necessary cross-account IAM permissions to allow access to the ops-admin roles.

- CIS Reference Architecture AWS Config Update: The reference architecture has been updated to use aws-config-multi-region in module-security instead of aws-config in cis-compliance-aws.

Other packages

- package-openvpn, v0.9.9: You can now configure a custom MTU for OpenVPN to use via the --link-mtu parameter.

- package-openvpn, v0.9.10: You can now restrict the CIDR blocks that are allowed to access the OpenVPN port with the variable allow_vpn_from_cidr_list.

- package-elk, v0.5.1: The default lambda engine for the elasticsearch-cluster-restore and elasticsearch-cluster-backup modules has been updated to nodejs10.x.

- module-cache, v0.9.1: Add count to var.allow_connections_from_cidr_blocks.

- module-load-balancer, v0.17.0: The alb module no longer exposes an environment_name input variable. This variable was solely used to set an Environment tag on the load balancer.

- module-load-balancer, v0.18.0: The alb module no longer accepts the aws_account_id and aws_region input variables.

- module-load-balancer, v0.18.1: Fix deprecation warning with destroy provisioner.

- module-asg, v0.8.4: The server-group module now exposes a new user_data_base64 parameter that you can use to pass in Base64-encoded data (e.g., gzipped cloud-init script).

- module-asg, v0.8.6: You can now configure the CloudWatch metrics to enable for the ASGs in the server-group module via the new enabled_metrics input variable.

- module-vpc, v0.8.2: Fixed issue where reducing num_availability_zones was producing Error updating VPC Endpoint: InvalidRouteTableId.NotFound. Add parameter create_resources for VPC Flow Logs to allow skipping them in the Reference Architecture.

- module-vpc, v0.8.3: Adds the icmp_type and icmp_code variables to the network ACL modules, allowing you to specify ICMP rules.

- module-server, v0.8.0: The single-server module now allows you to add custom security group IDs using the additional_security_group_ids input variable. The parameters that control SSH access in the single-server module have been refactored. The source_ami_filter we were using to find the latest CentOS AMI in Packer templates started to pick up the wrong AMI, probably due to some change in the AWS Marketplace. We've updated our filter to fix this.

- package-lambda, v0.7.4: The lambda and scheduled-lambda-job modules now support conditionally turning off resources in the module using the create_resources input parameter.

- package-static-assets, v0.6.0: Instead of supporting solely 404 and 500 error responses, now that we have Terraform 0.12, the s3-cloudfront module can now take in a dynamic list of error responses using the new error_responses input parameter, which allows you to specify custom error responses for any 4xx and 5xx error.

- package-static-assets, v0.6.1: Fix a bug in the s3-static-website module with versions of terraform >0.12.11, where the output calculation fails with an error.

DevOps News

50% price reduction for EKS

What happened: AWS has reduced the price of EKS by 50%.

Why it matters: AWS used to charge $0.20 per hour for running a managed Kubernetes control plane. This cost is now $0.10 per hour, which makes it more affordable for a wide variety of use cases.

What to do about it: This change is live, so enjoy the lower AWS bill in coming months.

AWS CLI v2 now available

What happened: AWS CLI version 2 (v2) is now GA (“generally available”).

Why it matters: The new CLI offers far better integration with AWS SSO, as well as many UI/UX improvements, such as wizards, auto complete, and even server-side auto complete (i.e., fetching live data via API calls for auto complete).

What to do about it: Check out the install instructions and migration guide, and give it a shot!

Security Updates

Below is a list of critical security updates that may impact your services. We notify Gruntwork customers of these vulnerabilities as soon as we know of them via the Gruntwork Security Alerts mailing list. It is up to you to scan this list and decide which of these apply and what to do about them, but most of these are severe vulnerabilities, and we recommend patching them ASAP.

Ubuntu Security Notice USN-4263-1: Sudo Vulnerability

Sudo could allow unintended access to the administrator account. Affected versions include Ubuntu 19.10, Ubuntu 18.04 LTS, Ubuntu 16.04 LTS. We recommend updating your sudo and sudo-ldap packages to the latest versions. More information: https://usn.ubuntu.com/4263-1.

Ubuntu Security Notice USN-4294-1: OpenSMTPD vulnerabilities

It was discovered that OpenSMTPD mishandled certain input. A remote, unauthenticated attacker could use this vulnerability to execute arbitrary shell commands as any non-root user. (CVE-2020-8794) More information: https://usn.ubuntu.com/4294-1.
